Part 1: Basics of Lattice Theory | A Guide to Fully Homomorphic Encryption (FHE)

A smooth introduction to lattice theory

Hello everyone! Welcome to the first post of the fully homomorphic encryption series. In this series, we will start with the basics of lattice theory, then move on to lattice-based cryptography, and finally dive into the fundamentals of fully homomorphic encryption.

Contents

Back to Linear Algebra

Vector Spaces

Bases

Linear Independence

Span

Fundamentals of Lattice Theory

Definition and Properties

Determinant and Volume

SVP & CVP

The Shortest Vector Problem

The Closest Vector Problem

Conclusion

Back to Linear Algebra

Linear algebra is a branch of mathematics concerning linear maps, linear equations, and their representations in vector spaces.

Vector Spaces

We want to formalize the notion of a vector. But it is easier to formalize the notion of a set of vectors together with the operations of:

vector addition,

scalar multiplication.

Definition: Let \(\mathbb{F}\) be a field. Let \(V\) be a set, a binary operation \(+\) on \(V\), and a map \(\cdot\) from \(F \times V \rightarrow V\) such that the following axioms hold:

\(v + w = w + v; \ \forall v,w \in V,\)

\(u + (v + w) = (u + v) + w; \ \forall u, v, w \in V\),

\(\exists \vec{0} \in V\) s.t. \(\vec{0} + v = v; \forall v \in V\),

\(\forall v \in V, \exists-v \in V\) s.t. \(v + (-v) = \vec{0}\),

\(\forall v \in V, 1_{\mathbb{F}} \cdot v = v\),

\(\forall a, b \in \mathbb{F}, \forall v \in V; (a + b) \cdot v = (a \cdot v) + (b \cdot v)\),

\(\forall a \in \mathbb{F}, \forall v, w \in V; a \cdot (v + w) = (a \cdot v) + (a \cdot w)\),

\(\forall a, b \in \mathbb{F}, \forall v \in V; (a \cdot b) \cdot v = a \cdot (b \cdot v)\).

\((V, + , \cdot)\) triple is called a vector space over\(\mathbb{F}\) if it satisfied the axioms above.

Elements of \(V\) are called vectors.

Some Remarks

Vector spaces are commutative.

All vector spaces have an additive identity.

All vectors have inverses within the same vector space.

Both scalar multiplication over vector addition and vector addition over hold.

Axioms (1)-(4) imply that \((V, +)\) is an abelian group.

Examples

\((\mathbb{R}^n, +, \cdot)\) is a vector space over the field \(\mathbb{R}\) for all integer values of \(n\).

Set of functions from any set \(S\) to a vector space with relevant operations is a vector space.

Bases

Linear Independence

Definition: Let \(V\) be a vector space over a field \(\mathbb{F}\). Say \(v_1, v_2, ..., v_n \in V\) and \(c_1, c_2, ..., c_n \in \mathbb{F}\). An expression of the form

$$c_1 \cdot v_1 + c_2 \cdot v_2 \ + ... + \ c_n \cdot v_n$$

is called a linear combination of \(v_1, v_2, ..., v_n\).

Definition: Let \(V\) be a vector space over \(\mathbb{F}\) and \(S\) a non-empty subset of \(V\). Then, \(S\) is called linearly independent if for any distinct \(v_1, v_2, ..., v_n \in S\)

$$c_1 \cdot v_1 + c_2 \cdot v_2 \ + ... + \ c_n \cdot v_n = \vec{0} \ \implies \ c_1 = c_1 = ... = c_n = 0.$$

Span

Let \(V\) be a vector space over \(\mathbb{F}\) and let \(S\) be a non-empty subset of \(V\).

Definition: The span of \(S\) is the following subset of \(V\):

$$Span(S) = \{ c_1 \cdot v_1 \ + ... + \ c_n \cdot v_n \ | \ \exists n; c_1, ..., c_n \in F; v_1, ..., v_n \in S \}.$$

Definition: Let \(V\) be a vector space, \(S\) a subset of \(V\). Then \(S\) is called a basis for V if

\(S\) is linearly independent,

\(Span(S) = V.\)

For example, let us consider the vector space \(V = \mathbb{R}^2\) with the field \(\mathbb{F} = \mathbb{R}\) and claim that \(S = \{ (1, 0), (0, 1) \}\) is a basis, or not.

Proof:

- Linear Independence

Suppose \(c_1 \cdot (1, 0) + c_2 \cdot (0, 1) = \vec{0} = (0, 0)\). Then \((c_1, c_2) = (0, 0)\). Hence \(c_1 = c_2 = 0\). So \(S\) is linearly independent.

- Span

The fact that \(Span(S) \subset V\) is clear. So it is enough to show that \(V \subset Span(S)\). Take \(v \in V = (x, y)\). It can be rewritten in terms of a linear combination of \(S\): \(v = x \cdot (1, 0) + y \cdot (0, 1) \in Span(S)\).

Hence, \(S\) is a basis for \(\mathbb{R}^2.\)

Fundamentals of Lattice Theory

Definition and Properties

Definition: Let \(v_1, v_2, ..., v_n \in \mathbb{R}^m\) be a set of linearly independent vectors. The lattice \(L\), generated by these vectors, is the set of integer linear combinations of these basis vectors \(v_1, v_2, ..., v_n.\) That is,

$$L = \{ a_1v_1 \ + ... + \ a_nv_n \ | \ a_i \in \mathbb{Z} \ \forall i \}.$$

Notes

The integers \(m\) and \(n\) are the dimension and the rank of the lattice, respectively.

If \(m = n\), then \(L\) is called a full-rank lattice.

Notice that \((L, +) < (\mathbb{Z}^n, +)\) and \((L, +) \cong (\mathbb{Z}^n, +).\)

If the coordinates are also integers, then we call them integer lattices, or integral lattices.

Definition: A matrix \(A \in \mathbb{Z}^{n \times n}\) is unimodular if it has a multiplicative inverse in \(\mathbb{Z}^{n \times n}.\)

Definition: If \(B\) and \(B'\) are two basis matrices, then

$$L(B) = L(B')$$

if and only if

$$B' = AB$$

for some unimodular matrix \(A\).

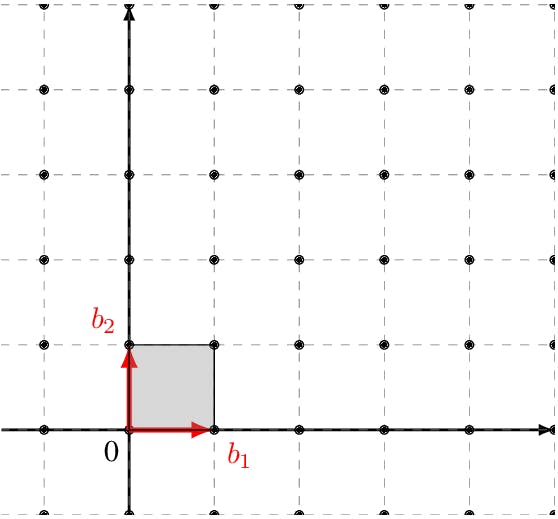

Determinant & Volume

Definition: Let \(L\) be an \(n\)-dimensional lattice with a basis \(\{v_1, v_2, ..., v_n \}.\) Then the fundamental domain of \(L\) is

$$F(v_1, ..., v_n) = \{ t_1v_1 + \ ... \ t_nv_n \ | \ t_i \in [0, 1) \}.$$

Definition: Let \(L\) be an \(n\)-dimensional lattice with a fundamental domain \(F\). Then the \(n\)-dimensional volume of \(F\) is called the determinant of \(L\), denoted by

$$det(L).$$

Proposition: If \(L\) is an \(n\)-dimensional full-rank lattice with a basis \(B = \{ v_1, ..., v_n \}\) and an associated fundamental domain \(F\), then the volume of \(F\) is equal to the absolute value of determinant of the basis. That is,

$$det(L) = Vol(F) = |det(B)|.$$

SVP & CVP

The Shortest Vector Problem

Definition: Given a lattice \(L\), the length of the shortest non-zero vector in \(L\) which is also the minimum distance between any two lattice vectors is defined as

$$\lambda_1(L) = min \{ ||v|| \ | \ v \in L - \{0\} \}$$

$$ \lambda_1(L) = min{ ||x - y|| \ | \ x, y \in L; x \neq y }.$$

The difficulty of SVP lies in the fact that when the dimension increases, it gets exponentially more difficult to find the shortest vector in a lattice.

The Closest Vector Problem

Definition: Given \(B\) and a target vector \(t\) that is not in the lattice \(L(B)\), find a vector in \(L(B)\) that is closest to \(t\), i.e., find a vector \(v \in L(B)\) such that \(\forall w \in L(B)\) it satisfies

$$||v - t|| = ||w - t||.$$

Similar to the SVP, the closest vector problem gets also extremely difficult as the number of dimension gets higher.

Conclusion

In this first post of our series on fully homomorphic encryption, we've discussed the foundational knowledge necessary for understanding the world of lattice-based cryptography. Starting with the essentials of linear algebra, we explored the concepts of vector spaces and bases, which are crucial for grasping the underlying principles of lattice theory.

We then delved into the fundamentals of lattice theory itself, discussing its definition, properties, and the significance of determinants and volume in the context of lattices. Additionally, we introduced two pivotal problems in lattice theory: the Shortest Vector Problem (SVP) and the Closest Vector Problem (CVP), highlighting their computational challenges and importance in cryptography.

I'm aiming to make this series as pedagogical as possible for anyone with a technical background. Stay tuned for the next post where we will be discussing Learning with Errors (LWE) problem.